Thought I would give an assembly Hello World a go, and get into some low-level programming.

After reading up on MASM, NASM and FASM, I decided on MASM, and soon came across a great blog post detailing how to set up VS2013 to run with MASM32.

After setting up the environment and running the Hello World app below, I noticed that this use of the MASM32 libraries seemed to vary greatly from the assembly code I had previously seen, which typically uses a series of three- and four-letter instructions mixed with memory addresses:

```asm
.386
.model flat, stdcall
.stack 4096
option casemap : none

include windows.inc
include masm32.inc
include user32.inc
include kernel32.inc
include macros.asm

includelib masm32.lib
includelib user32.lib
includelib kernel32.lib

.data
message db "Hello world!", "$"   ; not used below; print takes its own literal

.code
main PROC
    print "Hello World!"          ; MASM32 macro that writes the string to the console
    invoke ExitProcess, 0         ; exit with return code 0
main ENDP
END main
```

In my travels, the assembly I had glanced at looked much more like the example below, which I bumped into while setting up Visual Studio:

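```asm
.model small
.stack
.data
message db "Hello world", "$"   ; DOS function 09h prints up to the "$" terminator
.code
main proc
    mov ax, seg message         ; segment registers can't take an immediate,
    mov ds, ax                  ; so load the data segment into DS via AX
    mov ah, 09                  ; DOS function 09h: print $-terminated string
    lea dx, message             ; DS:DX -> message
    int 21h
    mov ax, 4c00h               ; AH=4Ch: terminate, AL=00h: return code 0
    int 21h
main endp
end main
```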
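As a rough illustration of what the 16-bit example asks DOS to do: DS:DX holds the string's segment and offset, and in real mode the physical address is segment × 16 + offset. INT 21h with AH=09h then outputs bytes from that address until it hits a `$`, which is never printed. The Python below is a hypothetical model of just that behaviour, not real DOS code; the `memory` array, the segment and offset values, and the function names are all made up for illustration.

```python
def linear_address(segment: int, offset: int) -> int:
    """Real-mode translation: segment * 16 + offset, wrapped to 20 bits as on the 8086."""
    return ((segment << 4) + offset) & 0xFFFFF


def dos_print_string(memory: bytearray, ds: int, dx: int) -> str:
    """Model of INT 21h, AH=09h: return the bytes at DS:DX up to (not including) '$'."""
    start = linear_address(ds, dx)
    end = memory.index(ord("$"), start)  # raises ValueError if there is no terminator
    return memory[start:end].decode("ascii")


# Hypothetical layout: the example's string placed at segment 0x1000, offset 0x0010.
memory = bytearray(0x20000)
DATA_SEG, MESSAGE_OFS = 0x1000, 0x0010
message = b"Hello world$"
addr = linear_address(DATA_SEG, MESSAGE_OFS)
memory[addr:addr + len(message)] = message

print(hex(addr))                                        # 0x10010
print(dos_print_string(memory, DATA_SEG, MESSAGE_OFS))  # Hello world
```

The flat model in the first example has no such segment arithmetic for the programmer to manage, which is a large part of why the two listings look so different.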

I naively assumed that this was what MASM looked like when you didn't use the MASM32 library references from the first example. That was until assembling the code above hit me with this:

```
1> Assembling source.asm...
1>source.asm(7): error A2004: symbol type conflict
1>source.asm(16): warning A4023: with /coff switch, leading underscore required for start address : main
```

As it seems this is a common mistake, replies on the MASM32 forums and Stack Overflow pointed out the difference between 16-bit MASM (real-mode DOS code, like the second example) and MASM32 (32-bit flat-model Windows code, like the first).

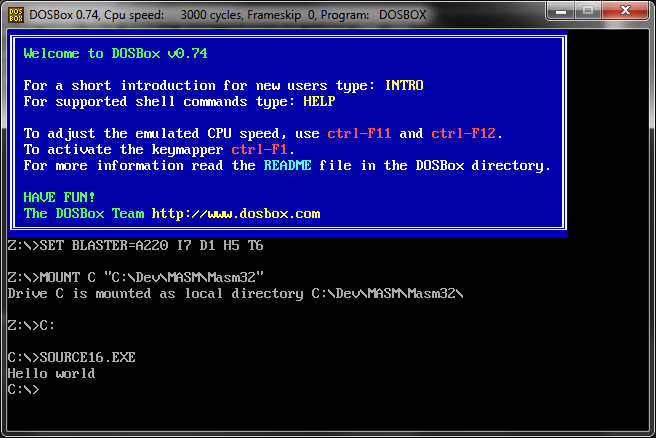

I still wanted to push forward with 16-bit MASM, but with Win7 x64 not supporting 16-bit programs, I figured I might have to use DOSBox.

I now knew I needed a 16-bit linker, and a bit of digging showed me that there was one in my MASM32 install. I tried looking through the project configuration, such as the Microsoft Macro Assembler settings, to see if I could find a place to point to link16.exe, with no luck.

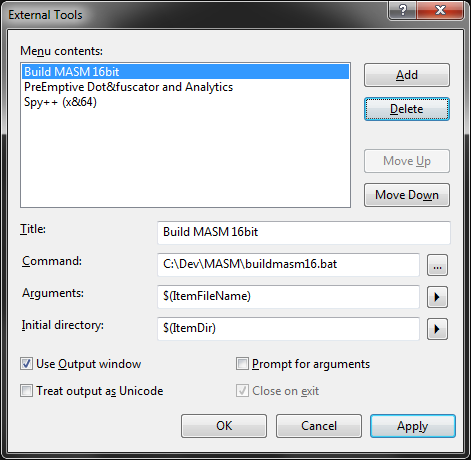

I then came across this very detailed article by Kip Irvine on setting up both 16- and 32-bit MASM in VS2012.

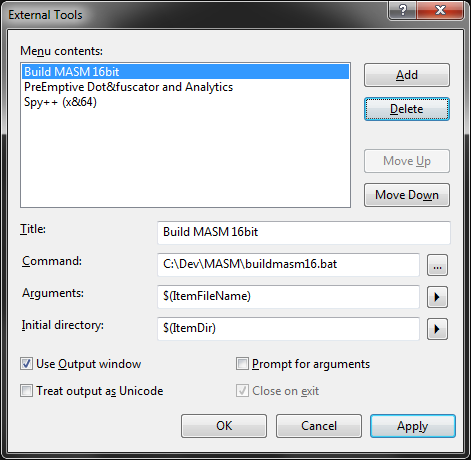

Following his directions, I went down the path of a batch file triggered by Visual Studio External Tools. However, I wanted to dig a bit deeper and write my own batch file.

After finding a copy of the make16.bat from his tutorial on GitHub, it looked like he used the ml.exe that ships with Visual Studio, not the one from the MASM32 download. Running a modified version gave me the following error:

```
MASM : warning A4018: invalid command-line option : -omf
```

The MSDN ML command-line reference indicated that this was due to my 64-bit install:

Generates object module file format (OMF) type of object module. /omf implies /c; ML.exe does not support linking OMF objects.

Not available in ml64.exe.

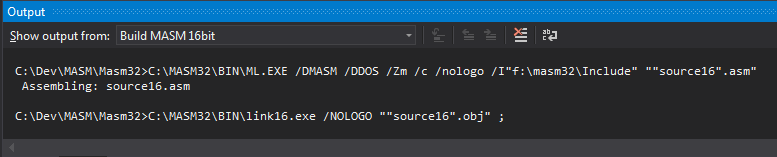

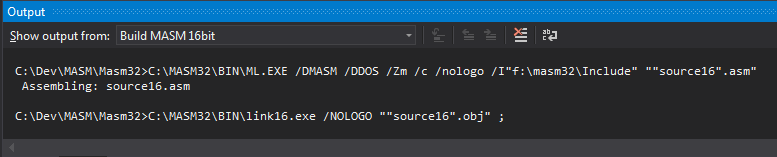

I decided to go with the ML.EXE installed with the MASM32 libraries, along with the commands I came across on Stack Overflow, and modified the batch file to use the arguments passed in from VS External Tools:

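```bat
REM Assemble only (/c) with MASM 5.1 compatibility (/Zm); %1 is the base name from External Tools
ML.EXE /DMASM /DDOS /Zm /c /nologo /I"c:\masm32\Include" "%1.asm"
REM Link with the 16-bit linker; the trailing ";" accepts the defaults for its prompts
link16.exe /NOLOGO "%1.obj" ;
```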

The semicolon I added at the end of the link16.exe arguments tells it to accept the default settings for its remaining prompts, so it doesn't wait for input. Perfect if you want the build result in the VS Output window instead of a DOS window.
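For comparison, the same two build steps could be driven from Python's `subprocess` module instead of a .bat file. This is a hypothetical sketch, not part of the setup described here: it assumes ML.EXE and link16.exe are on PATH, reuses the `c:\masm32\Include` path from the batch file, and `build_commands`/`build` are names made up for illustration.

```python
import subprocess
import sys

MASM32_INCLUDE = r"c:\masm32\Include"  # include path taken from the batch file


def build_commands(base: str) -> list[list[str]]:
    """Return the assemble and link command lines for `base` (no extension).

    Mirrors the batch file: ML.EXE assembles only (/c) with MASM 5.1
    compatibility (/Zm), then link16.exe links; the trailing ';' tells the
    16-bit linker to take its defaults instead of prompting.
    """
    return [
        ["ML.EXE", "/DMASM", "/DDOS", "/Zm", "/c", "/nologo",
         f"/I{MASM32_INCLUDE}", f"{base}.asm"],
        ["link16.exe", "/NOLOGO", f"{base}.obj", ";"],
    ]


def build(base: str) -> None:
    """Run each step in turn; check=True raises on the first non-zero exit code."""
    for cmd in build_commands(base):
        subprocess.run(cmd, check=True)


if __name__ == "__main__" and len(sys.argv) > 1:
    build(sys.argv[1])
```

Passing argument lists rather than one string lets Python handle quoting, which sidesteps the quote problems External Tools causes for batch files.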

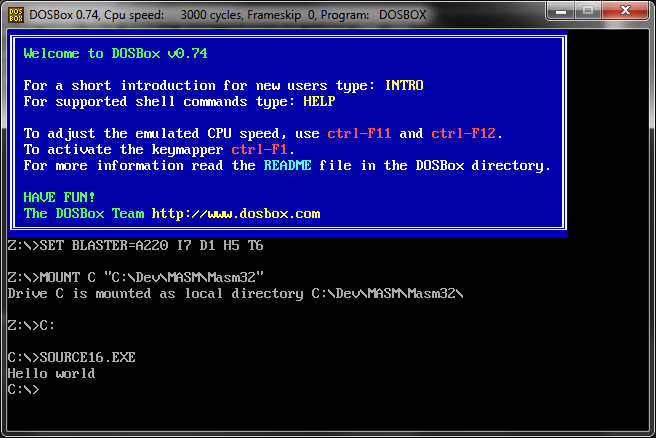

Now that my 16-bit MASM Hello World was assembled, I just needed a 16-bit platform to run it on.

I went with DOSBox as it has the command-line arguments I was hoping for, so I could integrate it with VS External Tools.

I created the following batch file, accepting the file name from External Tools as %1:

```bat
"C:\Program Files (x86)\DOSBox-0.74\dosbox.exe" C:\Dev\MASM\Masm32\%1.exe
```

However, it seems that External Tools wraps the argument in quotes, so the path DOSBox receives for the newly assembled exe isn't valid:

```
C:\Dev\MASM\Masm32>"C:\Program Files (x86)\DOSBox-0.74\dosbox.exe" C:\Dev\MASM\Masm32\"source16".exe
```

The build script seemed to be OK with this, even though the same quoting occurred there. However, the following commands trimmed the double quotes and allowed the exe to be passed into DOSBox:

```bat
REM External Tools passes the name quoted; %FILE:"=% replaces every " with nothing
SET FILE=%1
SET FILE=%FILE:"=%
"C:\Program Files (x86)\DOSBox-0.74\dosbox.exe" C:\Dev\MASM\Masm32\%FILE%.exe
```

And success.
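The `%FILE:"=%` line in the last batch file uses cmd.exe's `%VAR:old=new%` substitution syntax, which replaces every occurrence of `old` (here a double quote) with `new` (here nothing). The hypothetical Python sketch below models the same string handling so the effect is easy to see; it only builds the command line rather than launching DOSBox, reuses the paths from the batch file, and the helper names are my own.

```python
import ntpath

DOSBOX = r"C:\Program Files (x86)\DOSBox-0.74\dosbox.exe"
PROJECT_DIR = r"C:\Dev\MASM\Masm32"


def strip_quotes(arg: str) -> str:
    """Python equivalent of SET FILE=%FILE:"=% : drop every double quote."""
    return arg.replace('"', "")


def dosbox_command(arg: str) -> list[str]:
    """Build (but don't run) the DOSBox command for the name External Tools passes."""
    exe = ntpath.join(PROJECT_DIR, strip_quotes(arg) + ".exe")
    return [DOSBOX, exe]


# External Tools hands over the name wrapped in quotes, e.g. "source16"
print(dosbox_command('"source16"')[1])  # C:\Dev\MASM\Masm32\source16.exe
```

`ntpath` is used instead of `os.path` so the Windows-style join behaves the same whichever OS the sketch runs on.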